As artificial intelligence (AI) systems continue to evolve, their impact on our lives becomes increasingly significant. Kristina Höök, Professor at KTH Royal Institute of Technology, is at the forefront of studying human interaction with AI-powered drones through an ethical lens, together with Airi Lampinen, Kristina Popova, and Rachael Garrett.

In the WASP-HS project Ethics as Enacted Through Movement – Shaping and Being Shaped by Autonomous Systems, Kristina Höök, Airi Lampinen, Kristina Popova, and Rachael Garrett, at KTH Royal Institute of Technology and Stockholm University, investigate how humans interact with AI drones, focusing specifically on the expression of ethics in design practices.

“When humans interact and cooperate with drones and other autonomous systems, they both shape these machines and are shaped by them. For instance, people may change their ways of moving or gesturing to make the interaction work,” says Kristina Höök.

AI Lacks Ethical Awareness

Although AI drones can respond to human gestures and perform various tasks, Kristina Höök notes that essential elements of aesthetic and ethical expression are absent from interactions with drones.

“Complex ethical issues can be felt and experienced by both users and designers of technology. This sense of shared understanding is something a drone cannot comprehend,” she explains.

The design processes the research group works with involve ethical risks and vulnerabilities. The researchers deliberately place themselves in these risky situations in order to arrive at viable designs.

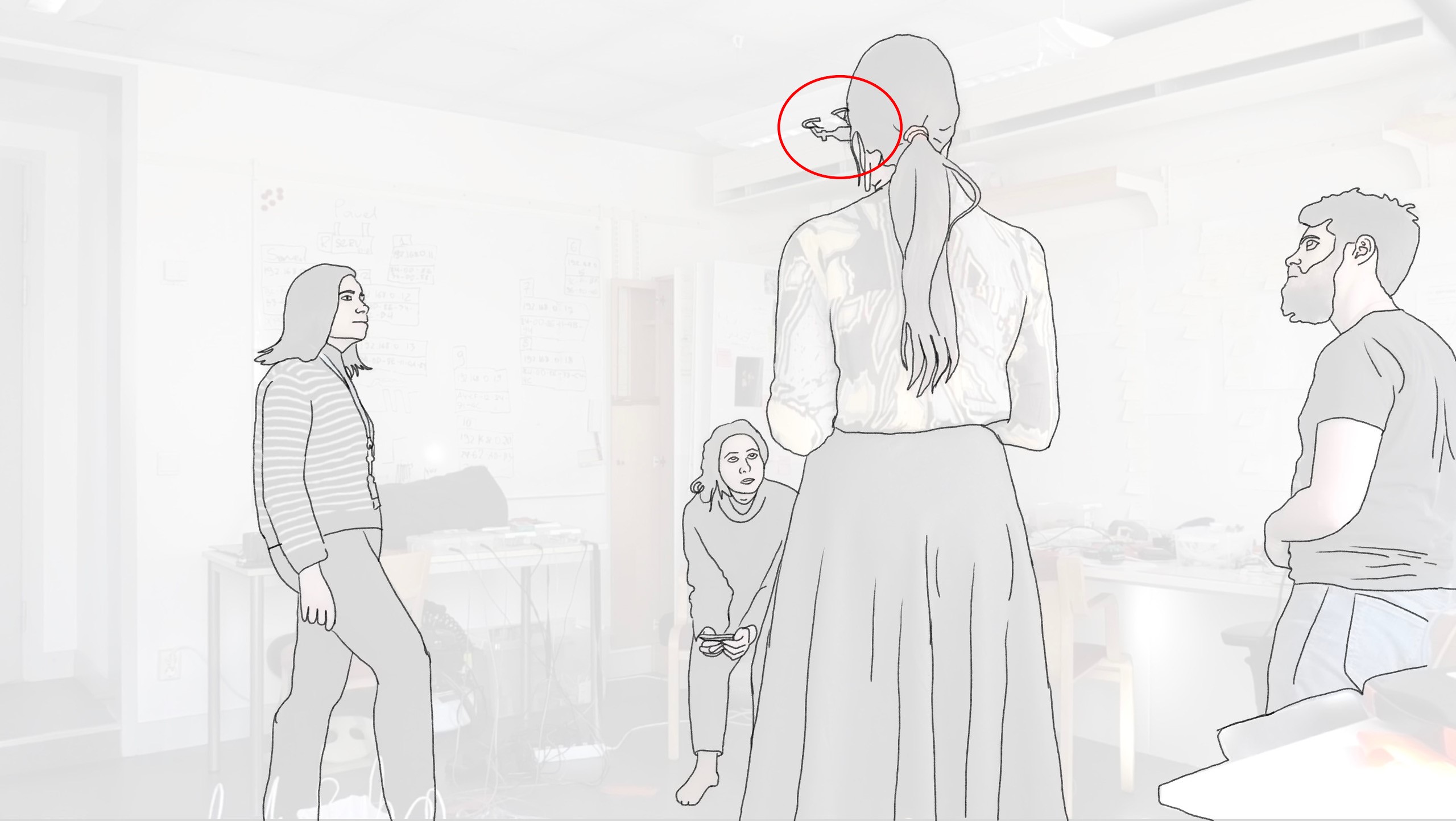

One example of such a risky situation occurred seconds after the image above was captured. The drone, hovering right in front of one of the participants, moved toward the woman on the left and crashed into her hair. The woman was not injured, but it was a frightening event for the design team.

“These situations have to be dealt with through ‘felt ethics’ to repair the damage and trust within the design team. This is an example of a situation in which ethical awareness is required,” she says.

Often, people feel whether something is right or wrong and recognize that others in the room feel the same way. Such unspoken bodily understanding is still beyond the capabilities of today’s drones. Kristina Höök adds that while it is well known that human communication extends far beyond words, this insight is often overlooked in scientific discussions about ethics and technology.

AI Through the Lens of Felt and Bodily Experience

Höök’s research introduces new perspectives for considering the ethics of technology and AI, aiming to shift the focus away from questions about AI “taking over the world.” Instead, the research group’s joint work encourages us to consider how AI shapes our lived and felt experiences and the types of norms and practices it reinforces.

“What are the felt experiences of AI? Why do people describe it with terms like ‘soulless,’ ‘disembodied,’ ‘biased,’ or ‘hallucinating’? These words tell us a lot about the emotional and experiential gaps that current AI design might be missing. We need to ask ourselves: What aspects of human experience and understanding are absent in our approach to designing AI?” Kristina Höök asks.

More About Felt Ethics

For more information on the research project Ethics as Enacted Through Movement – Shaping and Being Shaped by Autonomous Systems, please visit the WASP-HS website.

Academic Sources

Popova, K., Garrett, R., Núñez-Pacheco, C., Lampinen, A., & Höök, K. (2022, April). Vulnerability as an Ethical Stance in Soma Design Processes. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (pp. 1-13).

Garrett, R., Popova, K., Núñez-Pacheco, C., Asgeirsdottir, T., Lampinen, A., & Höök, K. (2023, April). Felt Ethics: Cultivating Ethical Sensibility in Design Practice. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (pp. 1-15).

Popova, K., Figueras, C., Höök, K., & Lampinen, A. (2024). Who Should Act? Distancing and Vulnerability in Technology Practitioners’ Accounts of Ethical Responsibility. Proceedings of the ACM on Human-Computer Interaction, 8(CSCW1), 1-27.