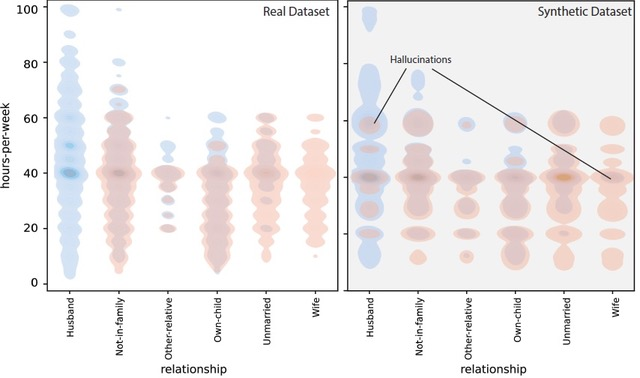

Synthetic data is increasingly used in research and development. In the WASP‑HS project “Social Complexity and Fairness in Synthetic Medical Data,” Ericka Johnson identified intersectional hallucinations and has now received additional funding from the Wallenberg Foundations to develop tools that detect and visualize these shifts in data.

What happens when data changes in ways users do not notice? These hidden shifts can lead to misunderstandings and wrong conclusions. They are especially common in synthetic data, which is data generated artificially from an existing dataset.

At the end of last year, Ericka Johnson, Professor of Gender and Society at Linköping University, received a Proof of Concept grant from the Wallenberg Foundations to continue the work she and her colleagues did in the WASP‑HS project “Social Complexity and Fairness in Synthetic Medical Data.” The grant supports early-stage development of new methods, products, or processes.

“In our work we studied how edge cases appear in synthetic data and discovered what we call intersectional hallucinations. These occur when systems accidentally combine traits that do not logically fit together, for example an ‘unmarried husband’ or ‘15-year-old university students,’” says Ericka Johnson.

Visualizing Significant Changes

“If people using synthetic data do not realize that the structure and meaning of their data changed during the process of creating it, they might misuse it or draw the wrong conclusions.”

Training a university enrollment AI on data showing many 15‑year‑olds studying at university would lead the system to learn a distorted reality. To help detect and visualize such serious errors, Ericka Johnson has received a Proof of Concept grant from the Wallenberg Foundations. The grant supports early‑stage development of new methods, products, or processes for researchers funded through Wallenberg strategic programs.

The PoC project consists of three work packages developing tools to detect and visualize changes introduced by synthetic data, including intersectional hallucinations. The tools will be deployable in users’ own environments to avoid exposing sensitive data, and will provide clear, reliable metrics for assessing privacy impacts of synthetic data generation.

“The goal is to make synthetic data more transparent and to support researchers, public authorities, and companies in using it more safely and accurately,” adds Johnson.

More About Intersectional Hallucinations

For more information about intersectional hallucinations and the tools being developed to identify them, please contact Ericka Johnson, Professor of Gender and Society at Linköping University, at ericka.johnson@liu.se.

Please also read the latest publication from the WASP-HS-funded work by her and her colleagues: “The Ontological Politics of Synthetic Data: Normalities, Outliers, and Intersectional Hallucinations.”