WASP-HS affiliated PhD student, Erik Campano, shares his experiences of attending the joint WASP and WASP-HS course Ethical, Legal, and Societal Aspects of Artificial Intelligence and Autonomous Systems.

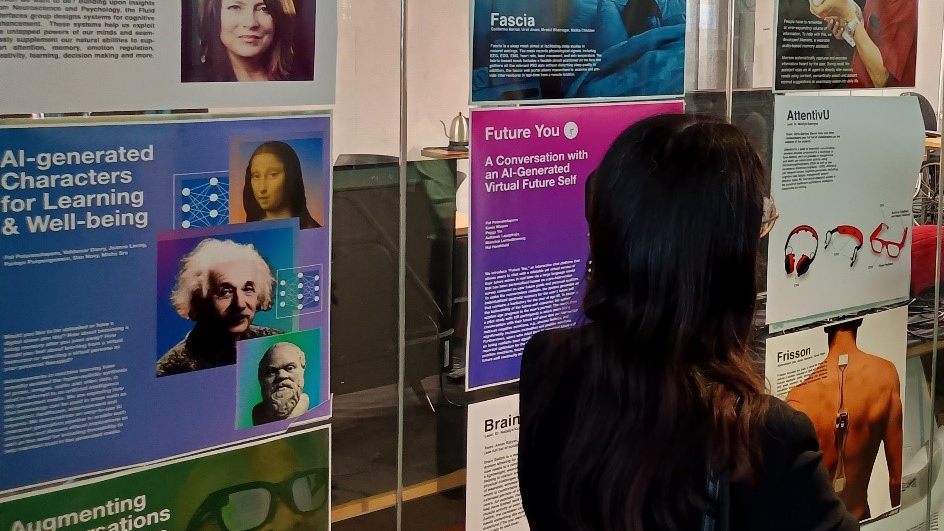

During this week’s WASP course, “Ethical, Legal, and Societal Aspects of Artificial Intelligence and Autonomous Systems”, we doctoral researchers spent the whole second day working on an interesting lesson with the admittedly unwieldy name of Protostrategos. Our instructors based the exercise closely on a fictional case study from Princeton University’s Dialogues in Artificial Intelligence and Ethics. In this case study, a group of programmers with a military background start a company (called Strategion by Princeton) and then develop artificially intelligent software to help automate the hiring process for that company. But the software has a problem: it discriminates against candidates who do not play sports. The problem has its origins in the way that the artificial intelligence module was trained; the original training data set – made up of people whom the company had already hired – had a high proportion of athletes, as most of the candidates were from the military. An applicant (named Hermann in the original Princeton case and Helena in the WASP case) who is otherwise qualified for a job is rejected by the system because he or she does not play sports. The automated hiring system, because it was trained on athletes, assumed that being sporty was necessary for being a good fit for the company. In the WASP version of this case, Helena is also disabled, so “not playing sports” becomes a proxy variable for “being disabled”. In short: the artificially intelligent hiring system ended up discriminating against people with disabilities.

Hermann/Helena writes a blog post publicizing this hiring practice, and files a complaint with the company accusing it of unfairness, illegal discrimination, and inappropriate use of public information. The company then goes into crisis mode, putting its legal team on the question of whether Protostrategos/Strategion violated anti-discrimination law and the European GDPR, as well as whether it was illegal to train the hiring system on employees’ resumes without asking their consent first. The company also takes a long, hard look at whether it fundamentally failed its own commitment to “honest tech solutions” in designing and implementing the system.

At this point, in the WASP version of the case, the company hires a group of doctoral researchers to do an external review of what happened. So, the approximately one hundred students in the WASP course were divided into groups of approximately ten. Each of us in these groups took the role of a fictional stakeholder: European Commission advisor, the company’s Chief Technical Officer, Product Manager, Data Protection Officer, Software Developer, Human Resources Recruiter, Clients (that is to say, companies buying Protostrategos’ hiring system), and citizen activists advocating for groups that are sometimes marginalized, such as people with disabilities. We were then given a series of provocative questions about the case, which each of us was meant to debate from the point of view of our assigned role. I was the European Commission advisor, so my job was to moderate the discussions without imposing my own view. This was a fun role to play, because I could observe the debate from above, without having to take a side.

The discussion within our group was lively. Should Protostrategos try to improve the hiring software, or just scrap it? Was the company right in disclosing the software’s discriminatory nature to Helena? Perhaps Protostrategos should have covered it up and simply offered her an interview. Does the company need to get the consent of its employees before using their data to train the system, and if so, is Protostrategos required to scrap its model and train it all over again? How much was this company in the wrong, both legally and ethically? And what role should values play in the company’s decision-making? One candidate set is the seven values from the European Commission’s Guidelines for Trustworthy AI: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination, and fairness; societal and environmental well-being; and accountability.

The brilliance of this particular exercise was in the way it engaged students by having them play roles in a fictional artificial intelligence ethics scenario. When we evaluate a morally and legally thorny case like this, we can be quick to impose our usual standpoints and prejudices. For example, I tend to think that most artificial intelligence ethicists overstate the importance of software transparency; that is to say, compared to the mainstream opinion on the issue, I have a bias in favor of permitting black boxes. So, in looking at this case, I am unlikely at first to argue that it matters whether or not we understand the precise logical steps the hiring system takes in deciding whether to reject an applicant. However, by being forced to see the case from a particular stakeholder’s point of view – say, the Chief Technical Officer – I can understand the argument that this system needs to be transparent. Otherwise, that officer cannot reverse-engineer the computer’s learning process and give Helena a clear answer, on the company’s behalf, about why she was rejected.

Playing these roles reminds us that sometimes people’s values with regard to artificial intelligence are the product of their job, rather than emerging from objective reflection about ethics and law, untainted by day-to-day concerns. I would like to think that my opinions about artificial intelligence in society derive from the latter. Pondering artificial intelligence ethics has been my full-time job for some three years now, so I would like to think that I have considered this topic from lots of angles. However, what I learned from this course is that it is always a healthy practice to step back from one’s particular opinions, try to adopt the role of a different stakeholder, and see artificial intelligence through that person’s eyes temporarily. This involves a kind of humility, one that perhaps the leaders of Strategion/Protostrategos failed to embrace when they first proposed using their own software to speed up the process of hiring people into their firm.