In 1987 Robert Kraut, then a Bell Labs scientist, asked whether and how technology can be introduced “to exploit its usefulness without exploiting its users”. More than three decades on, we continue to wonder how to intervene in continually evolving data-extractive software infrastructures to exploit their usefulness while preventing them from exploiting their users. Most recently, this has become evident in the debates around data and artificial intelligence (AI) system development. There is a proliferation of debates, policies, principles and guidelines that attempt to critique the existing technological world order and propose alternative conceptions of goodness that ought to drive technology development in the future, as well as reconfigure the behaviour of commercial companies and other organizations now. Scholars have taken note of this proliferation, providing analyses of manifestos, guidelines and principles, as well as producing substantive reviews of this burgeoning literature. What is less clear is how to move from the abstractions of ethical concern into the particularities of technology development as practice.

In the “Operationalising Ethics for AI” project, co-PIs Katherine Harrison & Irina Shklovski aim to address this gap, focusing on explainability and synthetic data, two approaches touted as potential solutions to some of the above problems.

What is Synthetic Data?

Synthetic data is the latest buzzword to polarise not only the AI/ML (artificial intelligence/machine learning) community, but also scholars from the humanities and social sciences who draw attention to the ethical and societal consequences of such developments. Synthetic data are data generated using purpose-built mathematical models or algorithms instead of being extracted from existing digital systems or produced through particular forms of data collection. Its promise is huge; for some, synthetic data represents a “magic sauce” that will address concerns about data privacy, scarcity and bias. For others, the lack of transparency around how these data are generated is a serious potential downside, while the claims of “solving” the privacy and bias problems have yet to be confirmed.
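To make the definition concrete, here is a minimal sketch in Python of one very simple way synthetic values can be generated from a model rather than collected. It assumes a single numeric variable and a Gaussian model fitted to a small sample; the function names and the toy numbers are made up for illustration and do not come from the project or from any particular synthetic data tool.

```python
import random
import statistics


def fit_gaussian(values):
    """Estimate the mean and standard deviation of a numeric sample."""
    return statistics.mean(values), statistics.stdev(values)


def generate_synthetic(values, n, seed=0):
    """Draw n synthetic values from a Gaussian fitted to the sample.

    The seed is fixed so that the example is reproducible.
    """
    mu, sigma = fit_gaussian(values)
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Made-up toy values standing in for a collected dataset (not real data).
toy_sample = [34.0, 41.0, 29.0, 55.0, 47.0, 38.0, 62.0, 44.0]

# The synthetic values come from the fitted model, not from the sample itself.
synthetic = generate_synthetic(toy_sample, n=1000)
```

Even in this toy case, the synthetic values can only reflect what the fitted model captured from the original sample, gaps and biases included, and nothing in the procedure by itself amounts to a privacy guarantee.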

Workshops to Explore Context Specific Challenges

We find that the effects of synthetic data can only truly be understood when considered in specific application areas. To address this, the “Operationalising Ethics for AI” project has been running a series of interdisciplinary workshops during this academic year focusing on medicine, smart cities, social sciences and census data as key areas with very different requirements, expectations, and concerns about synthetic data. Our goal with this series is to connect context-specific challenges with the technical development of this tool to mitigate some of the hype surrounding synthetic data.

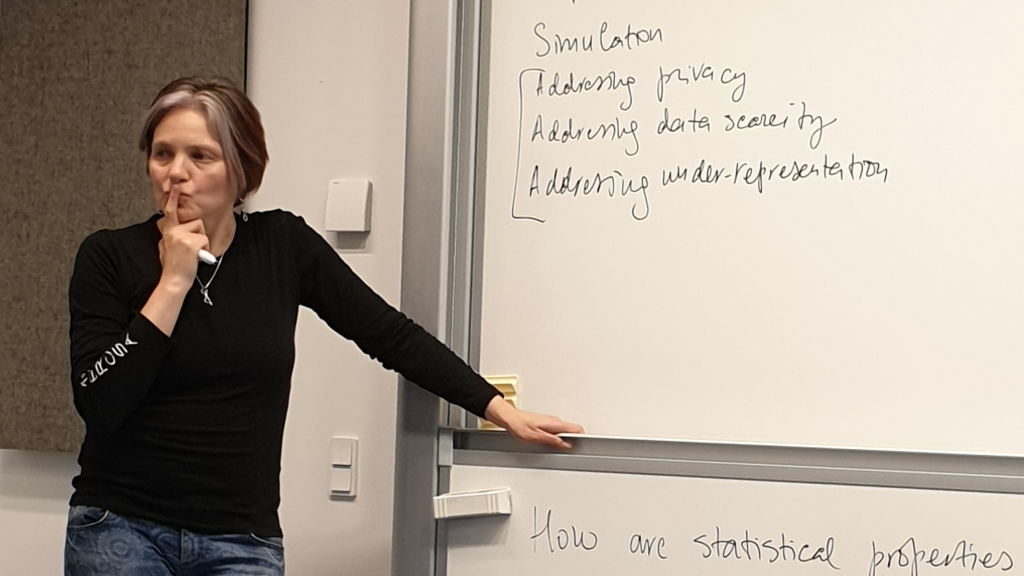

During the fall term of 2023, we convened two workshops focusing on medicine and the smart city. The first of these concerned challenges in the medical domain and took place at the University of Copenhagen. This workshop attracted participants from six universities in Sweden and Denmark, across different faculties including law, computer science, health sciences, medicine, communication studies, political sciences, gender studies and STS. The workshop was organized as a series of round-table discussions to enable participants to explore the topic. Participants agreed that synthetic data production techniques are able to generate good, controllable datasets, but discussed whether such techniques can address the deep concerns about personal data leakage and re-identification in medical datasets. Researchers from computer science and health sciences shared research showing that the hype overstates the capacity of current methods to produce synthetic datasets that are provably and enduringly privacy-preserving while retaining utility. They noted that there is not yet enough discussion of good governance strategies for synthetic data in the medical domain.

Whilst the workshop exploring use in the medical domain focused on issues such as the scarcity of medical data caused by privacy concerns and the time-intensive work of annotating medical images, the smart cities and digital twins workshop (held in collaboration with Linköping University’s TEMA DATA LAB) was more future-oriented. Here the participants explored the promise of synthetic data as a way to model and plan for future scenarios when commercial restrictions on data sharing limit the availability or the diversity of data. In this workshop, Dr. Gleb Papyshev (Hong Kong University of Science and Technology) presented his work on Virtual Singapore. Participants were then invited to work in groups to formulate questions for an expert panel comprising Prof. Ericka Johnson (Dept. of Thematic Studies – Gender Studies, Linköping University), Prof. Vangelis Angelakis (Dept. of Science & Technology, Linköping University) and Merja Penttinen (Principal Scientist, VTT Technical Research Centre of Finland). While Merja Penttinen pointed out that simulations driven by synthetic data can help predict urban situations that require more preparation, Ericka Johnson noted that urban simulations must take care not to assume full capacity for individual agency in social environments where people are governed, coerced and nudged into behaviour. Vangelis Angelakis added a further note of warning: synthetic data derived from datasets obtained in urban environments require close scrutiny of the validity of the original dataset in relation to ethical and societal “blind spots”.

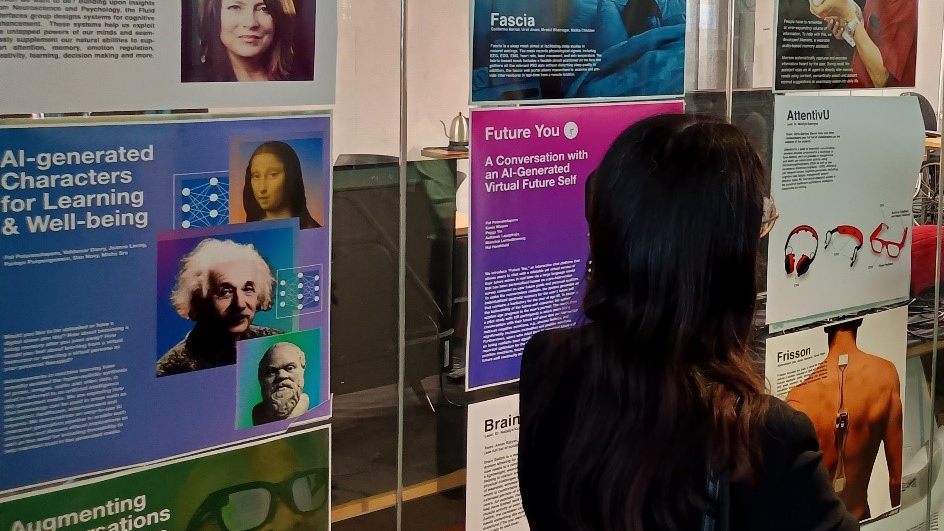

In the spring term, we turned our focus to how synthetic data might be used for qualitative research. Taking a recent Science article titled “AI and the transformation of social science research” as provocation, this workshop produced lively discussion concerning both what has been done in the social sciences so far in terms of using Large Language Models (LLMs) for qualitative research and what possibilities remain to be explored. Here the promise of producing more diverse datasets, by using tools such as ChatGPT to deliver interview transcripts or focus group materials that fill gaps in existing datasets, met with concerns about whether the materials could be considered authentic enough to be legitimate. The group also considered areas where these tools might be useful: Is it ever acceptable, for example, to use AI to produce diverse interviewer avatars if it increases inclusivity in the study by attracting/engaging a more diverse group of real participants? As the above suggests, trade-offs between ethics and pragmatics were a recurring theme throughout the series.

Workshop on Synthetic Data for Census

We are now preparing for a final workshop in this series on June 10th. This workshop will explore the context of synthetic data for census.

Census data represent a social contract between citizen and state, with the collection, use and management of these kinds of data reflecting spatial and temporal specificities of individual nation states’ policies and practices. Synthetic data, through older techniques of statistical disclosure control, have long been used to guarantee confidentiality as a key part of this contract. New techniques such as differential privacy are changing how state statistics data are prepared and released, challenging common expectations of what such data represent and of their connection to reality. In this workshop, we ask:

- What kinds of knowledge do state statistics/census data promise? How are/were these affected through the use of statistical disclosure control techniques?

- What are the impacts of new techniques to produce synthetic census data?

- Can the trajectory of synthetic data within the census domain give us some insights into what we may see evolving in other contexts of application?

This event involves an in-person visit to the “Sweden in Numbers” exhibit at Norrköping’s “Visualiseringscenter”, followed by a hybrid workshop including a presentation by Os Keyes (University of Washington) of the pseudopeople project.

More About Synthetic Data

Would you like to know more about our work on synthetic data? Here are two opportunities coming up!

- For those attending the WASP4ALL conference on 23 May, Irina Shklovski will be talking about “Synthetic data for AI – still human-centered?” as part of the afternoon panel discussion.

- Registration is now open for the census data workshop: June 10, 13:15-16:00 at Campus Norrköping, Linköping University or online (Zoom link forthcoming to registered participants). Please send an email indicating your interest to Satenik Sargsyan (satenik.sargsyan@liu.se) by 3 June.